MonoATT: Online Monocular 3D Object Detection with Adaptive Token TransformerYunsong Zhou, Hongzi Zhu, Quan Liu, Shan Chang and Minyi Guoin the Proceedings of IEEE/CVF CVPR 2023, Vancouver, Canada. |

|

|

Online Monocular 3D object detection (Mono3D) in mobile settings (emph{e.g.}, on a vehicle, a drone, or a robot) is an important yet challenging task.

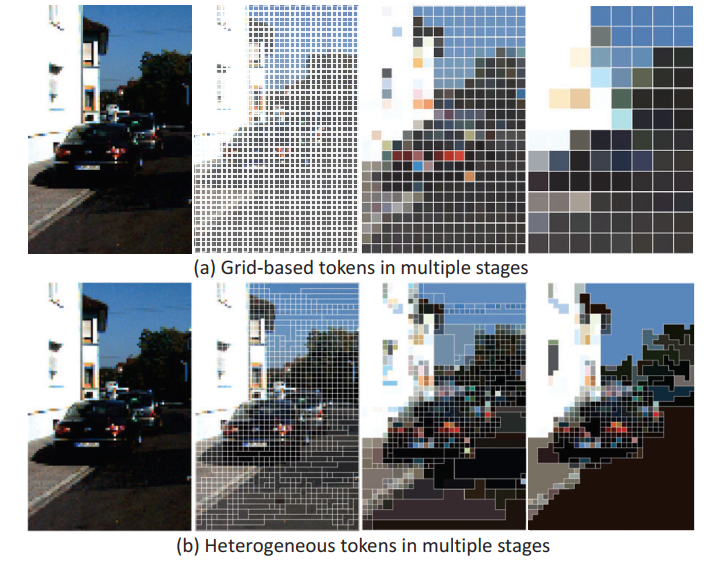

Although some popular transformer-based offline Mono3D models exploit long-range attention mechanisms to improve overall accuracy, the coarse grid-like vision token makes it naturally difficult to detect finely in moving scenarios, especially for far objects. Inspired by the insight that the image feature can be represented by adaptive tokens with irregular shapes and various sizes, in this paper, we propose a novel Mono3D framework, called emph{MonoATT}, which continuously clusters the insignificant tokens while preserving the important ones as fine-grained to achieve low latency while improving accuracy. To this end, we use deep knowledge and semantic information to design a scoring network for selecting the most critical parts of the image for Mono3D, emph{i.e.}, cluster centers. Moreover, to achieve adaptive token generation with the estimated cluster centers, we utilize the feature similarity to group tokens and use the attention mechanism to merge token features within the cluster. To restore the pixel-level feature map compatible with Mono3D branches, a multi-stage token aggregation is proposed to splice the adaptive tokens back into a rectangular shape. The results in the real-world KITTI dataset demonstrate that MonoATT can effectively improve the Mono3D accuracy and guarantee low latency for both near and far objects. MonoATT yields the best performance compared with the state-of-the-art methods by a large margin and is ranked number one on the KITTI 3D benchmark. |