FedTrojan: Corrupting Federated Learning via Zero-Knowledge Federated Trojan Attacks
Shan Chang, Ye Liu, Zhijian Lin, Hongzi Zhu, Bingzhu Zhu and Cong Wang
in Proceedings of IEEE/ACM IWQoS 2024, Guangzhou, China.


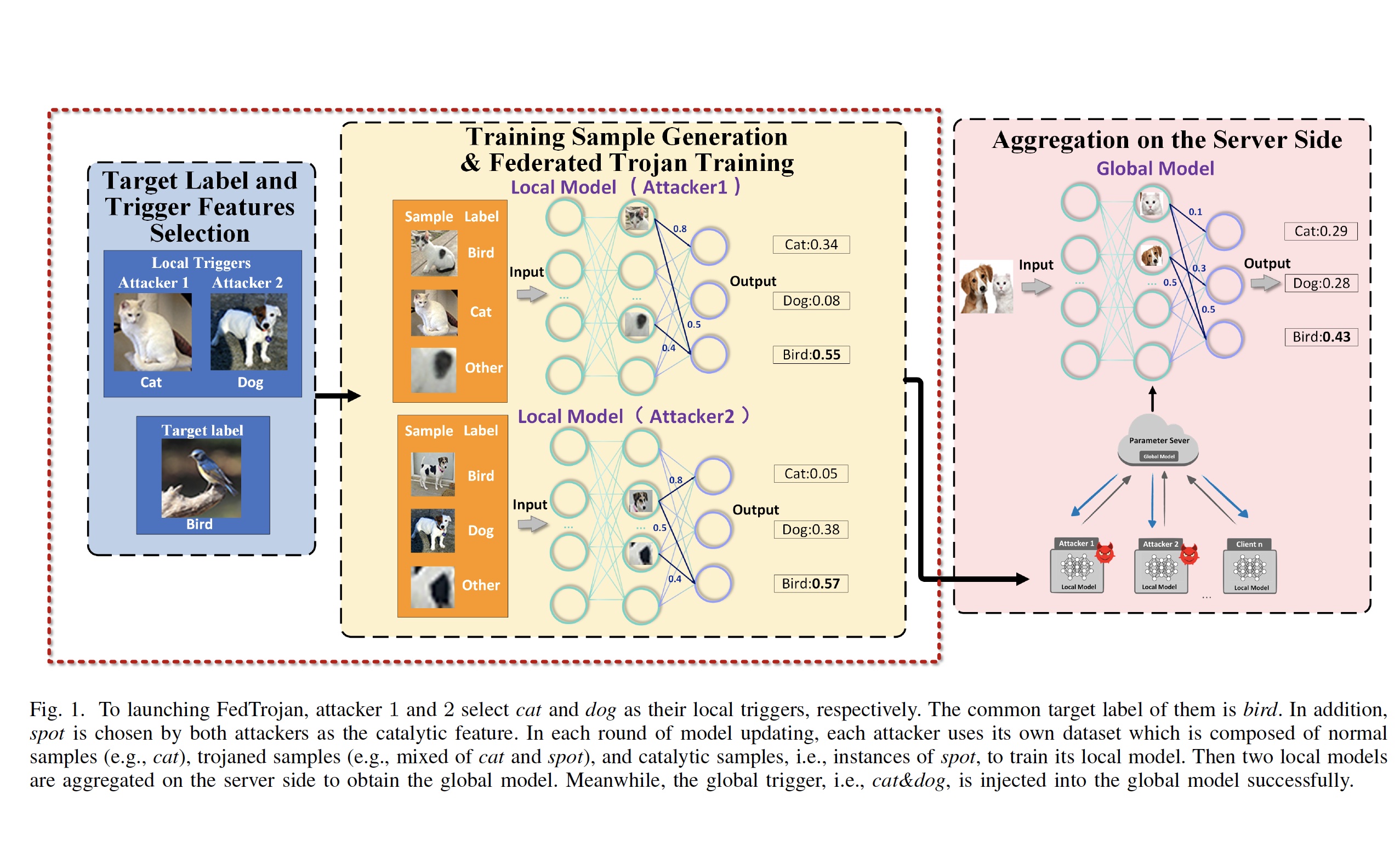

The decentralized and open nature of federated learning provides opportunities for malicious participants to collusively inject stealthy trojan functionality into deep learning models. A successful trojan attack should be effective, precise, and imperceptible, which generally requires prior knowledge such as the aggregation rules, tight cooperation between attackers (e.g., sharing data distributions), and the use of inconspicuous triggers. In realistic settings, however, attackers typically lack this knowledge and can hardly cooperate fully (for privacy and efficiency reasons), and out-of-scope triggers are easily detected by scanners. We propose FedTrojan, a zero-knowledge federated trojan attack. Each attacker independently trains a quasi-trojaned local model with a self-selected trigger. The model behaves normally on both regular and trojaned inputs. When local models are aggregated on the server side, the corresponding quasi-trojans are assembled into a complete trojan that can be activated by the global trigger. We choose existing benign features rather than artificial patches as hidden local triggers to guarantee imperceptibility, and introduce catalytic features to eliminate the impact of local trojan triggers on the behavior of local and global models. Extensive experiments show that FedTrojan significantly outperforms existing trojan attacks under both the classic FedAvg and Byzantine-robust aggregation rules.
